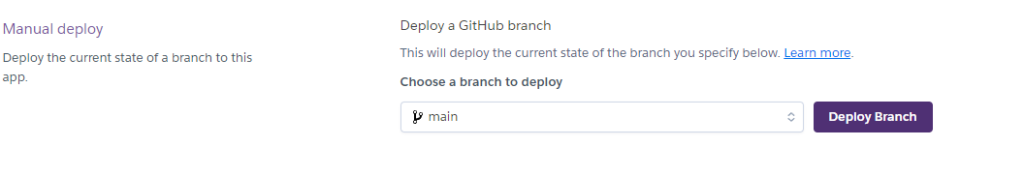

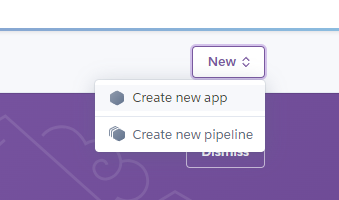

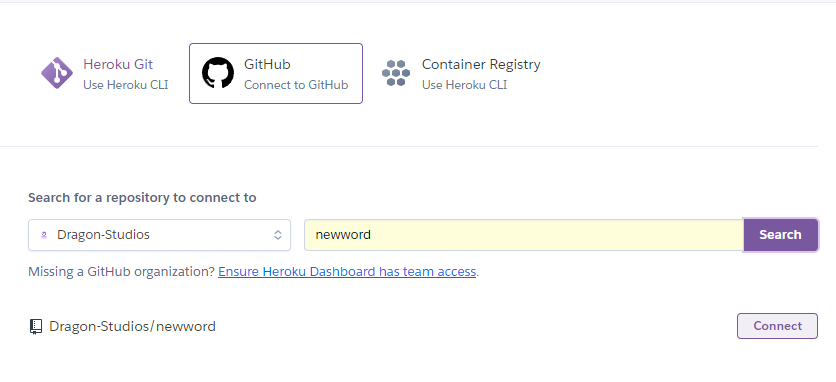

First, go to the Heroku website, create an app and connect it to your GitHub repository so it can be deployed from there:

Next, install the AWS CLI and the Heroku CLI on your machine. You will use both to run a few commands:

https://aws.amazon.com/cli/

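https://devcenter.heroku.com/articles/heroku-cli

Create a file named Procfile in the project root with the following content. Here "project" is the Django package that contains wsgi.py, so use your own package name:

web: gunicorn project.wsgi:application --log-file - --log-level debug

Create the runtime.txt file, also in the project root, to pin the Python version:

python-3.12.7

Now log in to Heroku:

heroku login

Next, add the Bucketeer add-on. It provides the S3 bucket that will store the static and media files. Replace <NOME_APP_HEROKU> with the name of your Heroku app:

heroku addons:create bucketeer:hobbyist --app <NOME_APP_HEROKU>

Then add the Heroku Postgres add-on for the database: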

heroku addons:create heroku-postgresql:essential-0 --app <NOME_APP_HEROKU>
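The Postgres add-on stores its connection string in the DATABASE_URL config var, and dj-database-url later turns it into a Django DATABASES entry. The stdlib-only sketch below illustrates that mapping with a hypothetical parse_database_url helper. It is not dj-database-url's actual implementation, and the URL used is made up:

```python
from urllib.parse import unquote, urlparse


def parse_database_url(url: str) -> dict:
    """Hypothetical helper: map a postgres:// URL to a Django DATABASES entry."""
    parts = urlparse(url)
    if parts.scheme not in ("postgres", "postgresql"):
        raise ValueError(f"unsupported scheme: {parts.scheme!r}")
    return {
        "ENGINE": "django.db.backends.postgresql",
        "NAME": parts.path.lstrip("/"),              # "/mydb" -> "mydb"
        "USER": unquote(parts.username or ""),       # credentials may be percent-encoded
        "PASSWORD": unquote(parts.password or ""),
        "HOST": parts.hostname or "",
        "PORT": parts.port or 5432,                  # default PostgreSQL port
    }


# Made-up URL in the same shape as the DATABASE_URL config var
db_config = parse_database_url("postgres://user:s3cret@ec2-1-2-3-4.compute-1.amazonaws.com:5432/mydb")
print(db_config["NAME"], db_config["HOST"], db_config["PORT"])
```
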

Now create the environment variables (config vars) on Heroku:

heroku config:set DEBUG=False --app <NOME_APP_HEROKU>

heroku config:set PRODUCTION=True --app <NOME_APP_HEROKU>

Add the required packages to requirements.txt:

    django-storages
    boto3
    python-decouple
    gunicorn
    dj-database-url
    psycopg2
    sorl-thumbnail
    Django
    pillow

Add storages to INSTALLED_APPS in settings.py:

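    INSTALLED_APPS = [
        # ... your existing apps ...
        'storages',
    ]

Configure the database in settings.py. In production it uses the DATABASE_URL created by the Heroku Postgres add-on. Locally it falls back to SQLite: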

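    # settings.py (imports at the top of the file)
    import os

    import dj_database_url
    from decouple import config

    # Set on Heroku with: heroku config:set PRODUCTION=True
    PRODUCTION = config('PRODUCTION', cast=bool, default=False)

    if PRODUCTION:
        DATABASES = {
            # Heroku Postgres exposes the connection string as DATABASE_URL
            'default': dj_database_url.config(default=os.getenv("DATABASE_URL"))
        }
    else:
        DATABASES = {
            'default': {
                'ENGINE': 'django.db.backends.sqlite3',
                'NAME': BASE_DIR / 'db.sqlite3',
            }
        }

Configure urls.py so that static and media files can be served locally when they are not on S3: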

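    # urls.py: imports at the top, conditions after the existing urlpatterns list
    from django.conf import settings
    from django.conf.urls.static import static

    # static() only adds routes when DEBUG is True and the prefix is a local
    # path such as '/static/'; otherwise it returns an empty list.
    if settings.LOCAL_SERVE_STATIC_FILES:
        urlpatterns += static(settings.STATIC_URL, document_root=settings.STATIC_ROOT)

    if settings.LOCAL_SERVE_MEDIA_FILES:
        urlpatterns += static(settings.MEDIA_URL, document_root=settings.MEDIA_ROOT)

Next, configure the Bucketeer storage settings in settings.py: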

    # settings.py (imports at the top of the file)
    import os

    from decouple import config

    MEDIA_URL = '/media/'
    STATIC_URL = '/static/'
    STATIC_ROOT = BASE_DIR / 'static'

    S3_ENABLED = config('S3_ENABLED', cast=bool, default=True)
    LOCAL_SERVE_MEDIA_FILES = config('LOCAL_SERVE_MEDIA_FILES', cast=bool, default=not S3_ENABLED)
    LOCAL_SERVE_STATIC_FILES = config('LOCAL_SERVE_STATIC_FILES', cast=bool, default=not S3_ENABLED)

    if (not LOCAL_SERVE_MEDIA_FILES or not LOCAL_SERVE_STATIC_FILES) and not S3_ENABLED:
        raise ValueError('S3_ENABLED must be true if either media or static files are not served locally')

    if S3_ENABLED:
        # Config vars created by the Bucketeer add-on. Locally, put them in a .env
        # file (python-decouple reads it). Never hard-code real keys as defaults:
        # they would end up committed to the repository.
        AWS_ACCESS_KEY_ID = config('BUCKETEER_AWS_ACCESS_KEY_ID')
        AWS_SECRET_ACCESS_KEY = config('BUCKETEER_AWS_SECRET_ACCESS_KEY')
        AWS_STORAGE_BUCKET_NAME = config('BUCKETEER_BUCKET_NAME')
        AWS_S3_REGION_NAME = config('BUCKETEER_AWS_REGION', default='us-east-1')
        # No per-object ACL: the bucket blocks public ACLs (BlockPublicAcls=TRUE),
        # and public read access comes from the bucket policy instead
        AWS_DEFAULT_ACL = None
        AWS_S3_SIGNATURE_VERSION = config('S3_SIGNATURE_VERSION', default='s3v4')
        AWS_S3_ENDPOINT_URL = f'https://{AWS_STORAGE_BUCKET_NAME}.s3.amazonaws.com'
        AWS_S3_OBJECT_PARAMETERS = {'CacheControl': 'max-age=86400'}
    else:
        STATICFILES_DIRS = [
            os.path.join(BASE_DIR, 'project', 'static'),
        ]
        MEDIA_ROOT = os.path.join(BASE_DIR, 'media')

    if not LOCAL_SERVE_STATIC_FILES:
        STATIC_DEFAULT_ACL = None  # see AWS_DEFAULT_ACL above
        STATIC_LOCATION = 'static'
        STATIC_URL = f'{AWS_S3_ENDPOINT_URL}/{STATIC_LOCATION}/'
        # Deprecated in Django 4.2 and removed in 5.1 (use STORAGES on newer versions)
        STATICFILES_STORAGE = 'utils.storage_backends.StaticStorage'

    if not LOCAL_SERVE_MEDIA_FILES:
        PUBLIC_MEDIA_DEFAULT_ACL = None
        PUBLIC_MEDIA_LOCATION = 'media/public'
        MEDIA_URL = f'{AWS_S3_ENDPOINT_URL}/{PUBLIC_MEDIA_LOCATION}/'
        # Deprecated in Django 4.2 and removed in 5.1 (use STORAGES on newer versions)
        DEFAULT_FILE_STORAGE = 'utils.storage_backends.PublicMediaStorage'
        PRIVATE_MEDIA_DEFAULT_ACL = 'private'
        PRIVATE_MEDIA_LOCATION = 'media/private'
        PRIVATE_FILE_STORAGE = 'utils.storage_backends.PrivateMediaStorage'

Create a utils package (a folder with an empty __init__.py) containing a storage_backends.py file with the following content:

    # utils/storage_backends.py
    from django.conf import settings
    from storages.backends.s3boto3 import S3Boto3Storage


    class StaticStorage(S3Boto3Storage):
        """Used to manage static files for the web server"""
        # getattr() keeps this module importable when the S3 settings are not defined
        location = getattr(settings, 'STATIC_LOCATION', 'static')
        default_acl = getattr(settings, 'STATIC_DEFAULT_ACL', None)


    class PublicMediaStorage(S3Boto3Storage):
        """Used to store & serve dynamic media files with no access expiration"""
        location = getattr(settings, 'PUBLIC_MEDIA_LOCATION', 'media/public')
        default_acl = getattr(settings, 'PUBLIC_MEDIA_DEFAULT_ACL', None)
        file_overwrite = False  # keep existing files; new uploads get a unique name


    class PrivateMediaStorage(S3Boto3Storage):
        """
        Used to store & serve dynamic media files using access keys
        and short-lived expirations to ensure more privacy control
        """
        location = getattr(settings, 'PRIVATE_MEDIA_LOCATION', 'media/private')
        default_acl = getattr(settings, 'PRIVATE_MEDIA_DEFAULT_ACL', 'private')
        file_overwrite = False
        custom_domain = False  # always generate signed, expiring URLs

Example of how to use it in a model:

    # models.py
    import pathlib

    from django.contrib.auth.models import AbstractUser
    from django.db import models

    from utils.storage_backends import PublicMediaStorage


    def _profile_avatar_upload_path(instance, filename):
        """Provides a clean upload path for user avatar images"""
        file_extension = pathlib.Path(filename).suffix
        # instance.id is None until the user has been saved for the first time
        return f'profiles/{instance.id}{file_extension}'


    class CustomUser(AbstractUser):
        # Requires AUTH_USER_MODEL in settings.py to point to this model
        profile_picture = models.ImageField(
            'Profile picture',
            upload_to=_profile_avatar_upload_path,
            storage=PublicMediaStorage(),
            blank=True,
            null=True,
        )

With everything configured, it is time to set up the bucket permissions. In my case, I want the files in S3 to be publicly readable so the application can link to them directly.

Create a public.json file with the content below, replacing <SEU_BUCKET> with your bucket name (the value of the BUCKETEER_BUCKET_NAME config var). The policy grants anonymous read-only access (s3:GetObject). The application uploads and deletes files with the Bucketeer credentials, so the public policy does not need write permissions:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Principal": "*",
          "Action": ["s3:GetObject"],
          "Resource": ["arn:aws:s3:::<SEU_BUCKET>/*"]
        }
      ]
    }
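To avoid editing the bucket name by hand, you can also generate the policy. The minimal sketch below uses a hypothetical public_read_policy helper. It builds an anonymous read-only (s3:GetObject) policy for the bucket named in BUCKETEER_BUCKET_NAME and writes it to public.json:

```python
import json
import os


def public_read_policy(bucket: str) -> dict:
    """Hypothetical helper: allow anyone to read (but not write) objects in `bucket`."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": "*",
                "Action": ["s3:GetObject"],
                # Object-level ARN: the statement covers every key in the bucket
                "Resource": [f"arn:aws:s3:::{bucket}/*"],
            }
        ],
    }


if __name__ == "__main__":
    bucket_name = os.environ.get("BUCKETEER_BUCKET_NAME")  # e.g. copied from the Heroku config vars
    if bucket_name:
        with open("public.json", "w", encoding="utf-8") as fh:
            json.dump(public_read_policy(bucket_name), fh, indent=2)
    else:
        print("Set BUCKETEER_BUCKET_NAME first")
```
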

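Now configure the AWS CLI with the Bucketeer keys stored in your Heroku app's config vars: BUCKETEER_AWS_ACCESS_KEY_ID, BUCKETEER_AWS_SECRET_ACCESS_KEY and BUCKETEER_AWS_REGION. Running heroku config --app <NOME_APP_HEROKU> lists them:

aws configure

With the CLI configured, relax the bucket's public access block so that it accepts a public bucket policy (public ACLs stay blocked), then upload the policy file: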

aws s3api put-public-access-block --bucket <SEU_BUCKET> --public-access-block-configuration BlockPublicAcls=TRUE,IgnorePublicAcls=TRUE,BlockPublicPolicy=FALSE,RestrictPublicBuckets=FALSE

aws s3api put-bucket-policy --bucket <SEU_BUCKET> --policy file://public.json

The application should now be able to access its files in S3. Next, collect the static files and upload them to the bucket:

python manage.py collectstatic --no-input

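aws s3 cp .\static\ s3://<SEU_BUCKET>/static/ --recursive

The command above uses a Windows path. On Linux or macOS, use ./static/ instead.

Trigger another deploy and check that everything in the application works!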
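If you would rather script the static upload than run aws s3 cp, the minimal sketch below uses boto3 (already in requirements.txt). It walks the collected static folder and uploads each file under the static/ prefix. The helper names are hypothetical, and the content type is guessed with mimetypes so browsers load CSS and JS correctly. Credentials come from boto3's default chain, for example the profile written by aws configure:

```python
import mimetypes
from pathlib import Path


def static_object_keys(root: Path, prefix: str = "static") -> dict:
    """Hypothetical helper: map each local file to its S3 key ('/'-separated on any OS)."""
    keys = {}
    for path in sorted(root.rglob("*")):
        if path.is_file():
            keys[path] = f"{prefix}/{path.relative_to(root).as_posix()}"
    return keys


def upload_static(root: Path, bucket: str, prefix: str = "static") -> None:
    """Upload every file under `root`, similar to `aws s3 cp --recursive`."""
    import boto3  # imported here so static_object_keys works without boto3 installed

    s3 = boto3.client("s3")
    for path, key in static_object_keys(root, prefix).items():
        content_type = mimetypes.guess_type(path.name)[0] or "application/octet-stream"
        s3.upload_file(str(path), bucket, key, ExtraArgs={"ContentType": content_type})


# Usage, after `python manage.py collectstatic --no-input`:
# upload_static(Path("static"), "<SEU_BUCKET>")
```
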